12 February 2018

Personalized Sending Time for Email Campaigns

Finding the best time to start an email campaign is one of the top-priority areas of email marketing research. Despite this, no definitive solution has been found yet. In fact, the best time to read email is such a personal matter that it is hard to give any general recommendations.

For example, a study by Pure360 (in Russian only) showed that delivered emails are read most between 5 and 9 pm. MailChimp, in turn, presented the results of extensive research that identified the interval between 9 am and 2 pm as the best time to send mailings. Why are the results so different, and which of them should we use in our business?

Some introductory reflections

The point is that the results of all such publications are more or less artificial. According to well-known studies, about 24% of all opens happen during the very first hour after the message is delivered. So a company that always starts its mailings at around the same time accumulates a large number of opens at that time. It seems there is nothing to worry about... until it comes to analyzing Open Rate and Click Rate by send time.

If we take all opens as 100% when analyzing Open Rate by time and calculate the share of opens for each hour, where will the highest share be? Not at the time when users are most active, but at the hour when mailings are usually sent (about 24% on average). Does this really reflect users' preferred time for reading email? No. With this approach, you can only detect the time when you usually start your campaigns. To find the time that is actually convenient for subscribers, you need to enrich your data by sending mailings at different times of day and on different days of the week. The following diagrams illustrate these points.
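To make this bias concrete, here is a small Python calculation, a sketch under simplifying assumptions. The 24% first-hour share comes from the studies mentioned above; the user's "true" reading preference (a peak at 12:00) and the rule that all remaining opens follow that preference are hypothetical.

```python
SEND_HOUR = 15           # hypothetical: the company always sends at 15:00
FIRST_HOUR_SHARE = 0.24  # share of opens in the first hour after delivery


def hourly_open_shares(send_hour, first_hour_share, preference):
    """Expected share of all opens that falls into each hour of the day.

    Assumption: a fixed share of opens happens in the hour right after
    delivery; the remaining opens follow the user's own reading preference.
    """
    total = sum(preference)
    shares = [(1 - first_hour_share) * p / total for p in preference]
    shares[send_hour] += first_hour_share
    return shares


# Hypothetical user who really prefers to read email at 12:00.
preference = [1.0] * 24
preference[12] = 3.0

shares = hourly_open_shares(SEND_HOUR, FIRST_HOUR_SHARE, preference)
busiest = max(range(24), key=lambda h: shares[h])
print(f"busiest hour: {busiest}:00 ({shares[busiest]:.1%})")
print(f"preferred hour: 12:00 ({shares[12]:.1%})")
```

With these numbers, 15:00 collects about 26.9% of all opens and 12:00 only about 8.8%, so the per-hour share points at the send time rather than at the user's real preference.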

Consider a company that sends its mailings every week, mostly on Wednesday and Friday at 15:00 and on Saturday at 10:00. The diagram below shows how opens of this company's campaigns are distributed over time. Darker colors indicate a higher percentage of opened emails.

The diagram shows that the largest share of opens coincides with the campaign start time. One might even think that the company sends its mailings exactly when they are read best, which looks like a very good indicator of the optimal mailing time. But a logical question arises: do the company's subscribers really prefer this time for reading email? How did the company manage to guess it? Let's check how the same users read mailings from other companies.

We then added the opens of bulk mailings that other companies using our system sent to the same addresses. The whole picture changed completely. It turns out that the users who, as we assumed, prefer to read emails at 15:00 also read emails at other times of the day. In fact, they prefer 12:00 noon.

Would the picture change radically again if we had data on opens of emails delivered at yet another time? Can we rely on this data if we only know about some of the emails each user opened? We like to think over questions like these :)

Only a theoretical answer is possible here. As we know, natural processes can often be described by a normal distribution, and statistics tells us that a sample drawn from such a process (under certain conditions, of course) reflects the overall tendency quite accurately. We therefore hope that our case is no exception to the laws of nature, so we can rely on part of a user's history to find the overall tendencies.

But after collecting the resulting data, we realized that working with overall tendencies makes no sense: we had to go into more detail, not just to the level of user segments by interest but all the way down to each individual user.

1. Finding the personalized time using the maximum frequency method

The method we chose to start our research with is very simple: we collect the user's history of opens and then find the day of the week and the time of day when the user reads messages most intensively.

It's important to note that each user's history was collected across our entire system, where everyone receives mailings from about 10 companies.
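The maximum frequency method fits in a few lines. The sketch below assumes that a user's history is simply a list of `datetime` open timestamps gathered from all senders; the function name `max_frequency_slot` and the sample history are hypothetical, for illustration only.

```python
from collections import Counter
from datetime import datetime


def max_frequency_slot(open_times):
    """Return the (weekday, hour) slot in which the user opened emails most often.

    weekday follows datetime.weekday(): Monday is 0, Sunday is 6.
    Returns None when the history is empty.
    """
    counts = Counter((t.weekday(), t.hour) for t in open_times)
    if not counts:
        return None
    slot, _count = counts.most_common(1)[0]
    return slot


# Hypothetical history: opens from all senders in the system.
history = [
    datetime(2018, 2, 16, 19, 5),   # Friday
    datetime(2018, 2, 23, 19, 40),  # Friday
    datetime(2018, 2, 23, 18, 10),  # Friday
    datetime(2018, 2, 14, 9, 0),    # Wednesday
]
print(max_frequency_slot(history))  # (4, 19): Friday, 19:00
```

Ties are broken in favor of the slot that appears first in the history, because `Counter.most_common` keeps insertion order for equal counts.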

Let's plot the behavior of a randomly chosen user.

Each point on the diagram shows how often the user reads email at a given time of day. The selected user has a very clear history: a peak at 19:00 on Friday stands out. If we send this user emails an hour earlier, we hit 18:00, which is the second peak. So we have certainly found the most preferable time for this user.

In practice, users' behavior is not always this clear. Some users have several peaks, and others simply have a poor history of email reading. In such cases, this approach isn't effective. Such a case is presented in the following diagram.
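For users like these, one simple safeguard before trusting the peak is to require a minimum number of opens and a clear gap between the top slot and the runner-up. The thresholds `MIN_OPENS` and `MIN_PEAK_RATIO` below are hypothetical values, not ones from our experiments; when the function returns `None`, the mailing would fall back to the usual send time.

```python
from collections import Counter
from datetime import datetime

MIN_OPENS = 5         # hypothetical: fewer opens than this is too poor a history
MIN_PEAK_RATIO = 1.5  # hypothetical: top slot must beat the runner-up by 50%


def reliable_peak(open_times, min_opens=MIN_OPENS, min_ratio=MIN_PEAK_RATIO):
    """Return the peak (weekday, hour) slot, or None if the history is unreliable."""
    counts = Counter((t.weekday(), t.hour) for t in open_times)
    if sum(counts.values()) < min_opens:
        return None  # poor history
    ranked = counts.most_common(2)
    if len(ranked) == 2 and ranked[0][1] < min_ratio * ranked[1][1]:
        return None  # two or more comparable peaks
    return ranked[0][0]


friday = datetime(2018, 2, 16, 19, 5)
monday = datetime(2018, 2, 12, 8, 0)
print(reliable_peak([friday] * 4 + [monday] * 2))  # (4, 19)
print(reliable_peak([friday] * 3 + [monday] * 3))  # None: two equal peaks
```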

1.1. Testing the method and results

To test the maximum frequency method, we ran an experiment similar to an A/B test. The test sample was split into two segments, experimental and control (we configured these filters using conditional and composite dynamic segments). The control segment received the message at the usual time (15:00 for this company), while the experimental segment received it at each user's individual time, as calculated by the algorithm. Naturally, both segments were tested in parallel and received the same email. In addition, we stratified the segments according to the users' latest activity and the type of their history.
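A stratified split like this can be sketched as follows: users are grouped into strata (here by weeks since last activity and a coarse history type, both hypothetical field names), and each stratum is shuffled and halved so that both segments get the same mix. This is an illustration, not the configuration of our dynamic segments.

```python
import random
from collections import defaultdict


def stratified_split(users, stratum_of, seed=0):
    """Split users 50/50 into control and experimental groups within each stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for user in users:
        strata[stratum_of(user)].append(user)
    control, experimental = [], []
    for members in strata.values():
        rng.shuffle(members)
        half = len(members) // 2
        control.extend(members[:half])       # odd-sized strata give the extra
        experimental.extend(members[half:])  # user to the experimental group
    return control, experimental


def stratum(user):
    """Hypothetical stratification key: (weeks since last activity, history type)."""
    return (user["weeks_inactive"], user["history"])


# Hypothetical users.
users = [
    {"id": i, "weeks_inactive": i % 3, "history": "rich" if i % 2 else "sparse"}
    for i in range(60)
]
control, experimental = stratified_split(users, stratum, seed=42)
print(len(control), len(experimental))  # 30 30
```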

1.2. Open Rate analysis

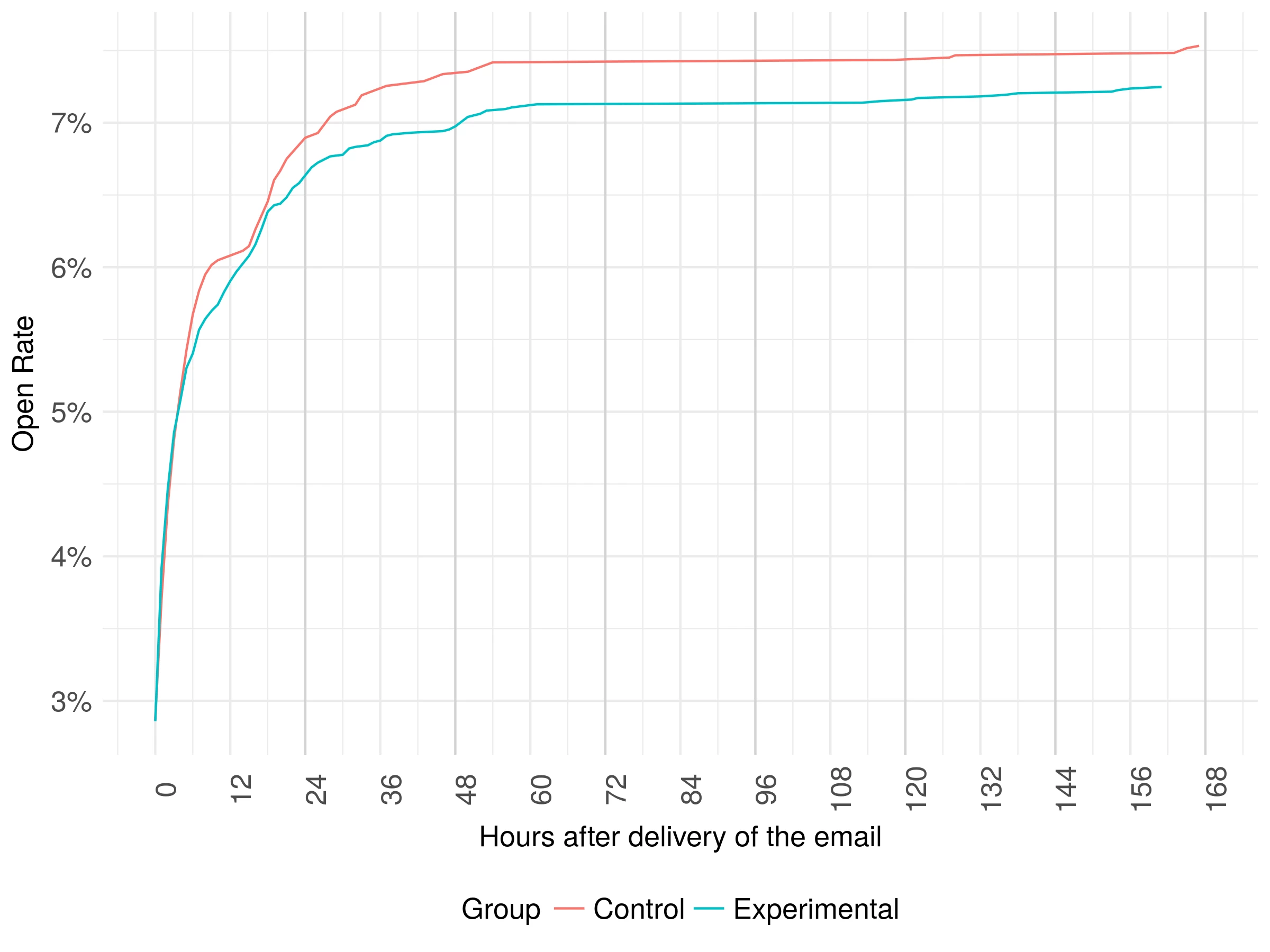

The results of monitoring the test segments for one week were quite unexpected.

The test shows that users in the experimental segment read more emails than those in the control group. Moreover, the χ² test, applied at several time intervals, shows that the differences are statistically significant. Since the users of both segments were in equal conditions, we can conclude that the result was achieved precisely by the choice of campaign start time.
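For reference, here is the Pearson χ² statistic for a 2×2 table of opened versus unopened emails in two segments, written without dependencies. The segment sizes behind our results are not given here, so the example counts are hypothetical. If SciPy is available, `scipy.stats.chi2_contingency(table, correction=False)` returns the same statistic.

```python
CRITICAL_95 = 3.841  # chi-square critical value, 1 degree of freedom, alpha = 0.05


def chi_square_2x2(opened_a, total_a, opened_b, total_b):
    """Pearson chi-square statistic (no continuity correction) for
    opened / not-opened counts in two segments.

    Assumes every row and column total is positive.
    """
    observed = [[opened_a, total_a - opened_a],
                [opened_b, total_b - opened_b]]
    row_totals = [total_a, total_b]
    col_totals = [observed[0][j] + observed[1][j] for j in range(2)]
    n = total_a + total_b
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat


# Hypothetical counts: 10 of 100 opened in one segment, 20 of 100 in the other.
stat = chi_square_2x2(10, 100, 20, 100)
print(round(stat, 2), stat > CRITICAL_95)  # 3.92 True
```

In this toy example the statistic is 200/51 ≈ 3.92, just above the 3.841 critical value for one degree of freedom at the 5% level.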

Cumulative Open Rate, %

| Time after campaign start, hours | 1 | 3 | 12 | 24 | 48 | 72 | 168 |
|---|---|---|---|---|---|---|---|
| Control segment (usual time, 15:00) | 3.67 | 4.51 | 5.69 | 6.49 | 6.87 | 7.13 | 7.44 |
| Experimental segment (personal time) | 5.53 | 5.98 | 6.74 | 7.28 | 7.77 | 7.89 | 8.17 |
| Absolute difference, percentage points | 1.86 | 1.47 | 1.05 | 0.79 | 0.9 | 0.76 | 0.73 |
| Relative difference, % | 50.68 | 32.59 | 18.45 | 12.17 | 13.10 | 10.66 | 9.81 |
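The difference rows follow directly from the two segment rows: the absolute difference is experimental minus control in percentage points, and the relative difference is that gap divided by the control value. The short check below recomputes them from the table values; the Click Rate tables use the same formulas.

```python
# Cumulative Open Rate, %, at 1, 3, 12, 24, 48, 72 and 168 hours (table above).
control = [3.67, 4.51, 5.69, 6.49, 6.87, 7.13, 7.44]
experimental = [5.53, 5.98, 6.74, 7.28, 7.77, 7.89, 8.17]


def absolute_difference(exp, ctrl):
    """Gap in percentage points."""
    return exp - ctrl


def relative_difference(exp, ctrl):
    """Gap relative to the control value, in percent."""
    return (exp - ctrl) / ctrl * 100


abs_diff = [round(absolute_difference(e, c), 2) for e, c in zip(experimental, control)]
rel_diff = [round(relative_difference(e, c), 2) for e, c in zip(experimental, control)]
print(abs_diff)
print(rel_diff)
```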

An additional analysis of user activity in the first 24 hours after the email was received confirmed that we really did choose the best mailing time for the experimental segment. The next diagram shows the distribution of opens in the first 24 hours after delivery.

In the diagram we can see that:

- in the experimental segment, the density of opens is not spread out but concentrated close to the zero mark;

- in the control segment, 22% of all opens in the first 24 hours happened in the very first hour (quite consistent with the studies mentioned above);

- in the experimental segment, 73% (!) of all opens in the first 24 hours happened in the first hour (232% higher than in the control group).
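The first-hour concentration can be computed from the delivery-to-open delay of each open. A sketch, assuming delays are available in hours; the function names and sample delays are illustrative.

```python
def first_hour_share(delays_hours):
    """Share of the opens made within 24 hours of delivery that happened in the first hour.

    delays_hours: delivery-to-open delay of each open, in hours.
    """
    within_day = [d for d in delays_hours if 0 <= d < 24]
    if not within_day:
        return 0.0
    return sum(1 for d in within_day if d < 1) / len(within_day)


def relative_gain(new, base):
    """Relative change of `new` over `base`, in percent."""
    return (new - base) / base * 100


print(first_hour_share([0.2, 0.5, 3.0, 30.0]))  # 2 of the 3 opens within the day
print(round(relative_gain(0.73, 0.22), 1))      # experimental vs control shares
```

`relative_gain(0.73, 0.22)` gives about 231.8, which matches the 232% quoted above.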

In other words, users in the experimental segment read their emails right after delivery, which means they received the message at the time most convenient for them.

1.3. Click Rate analysis

In the experimental segment, Click Rate increased by 35% in relative terms. This growth came from clicks made during the first hour after delivery.

Cumulative Click Rate, %

| Time after campaign start, hours | 1 | 3 | 8 | 24 |
|---|---|---|---|---|
| Control segment (usual time, 15:00) | 1.87 | 1.93 | 1.95 | 2 |
| Experimental segment (personal time) | 2.6 | 2.63 | 2.65 | 2.7 |
| Absolute difference, percentage points | 0.73 | 0.7 | 0.7 | 0.7 |
| Relative increase, % | 39 | 36.3 | 35.9 | 35 |

1.4. Effect analysis

Let's look at how the effect depends on the time since the user's latest activity. We take one week as the unit of time and split all users in the test group into subgroups by the number of weeks that have passed since their latest activity. For example, the "less than 1 week" subgroup consists of users who opened an email or clicked a link during the week before the test mailing; "1 week" are those who were last active the week before that, "2 weeks" the week before that, and so on.
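Assigning users to these subgroups only needs the time of their latest open or click. A minimal sketch, assuming both timestamps are naive `datetime` values in the same time zone; `weeks_since_activity`, `subgroup_label` and the sample dates are hypothetical.

```python
from datetime import datetime, timedelta


def weeks_since_activity(last_activity, mailing_time):
    """Whole weeks between the latest open/click and the test mailing."""
    return (mailing_time - last_activity) // timedelta(weeks=1)


def subgroup_label(weeks):
    """Name of the subgroup, following the labels used in the text."""
    if weeks == 0:
        return "less than 1 week"
    return f"{weeks} week" if weeks == 1 else f"{weeks} weeks"


mailing = datetime(2018, 2, 12, 15, 0)  # hypothetical test mailing time
last_seen = [
    datetime(2018, 2, 8, 10, 0),
    datetime(2018, 1, 30, 10, 0),
    datetime(2018, 1, 1, 10, 0),
]
for last in last_seen:
    print(last.date(), "->", subgroup_label(weeks_since_activity(last, mailing)))
```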

For this test, the diagram shows that the effect was achieved for users who had been active during the last 5 weeks. However, after running many tests, we concluded that on average the effect holds for users whose latest activity was up to 2 months ago.

1.5. Choosing the further direction

With the maximum frequency algorithm, we managed to increase Open Rate by about 10% and Click Rate by 35% in relative terms. Moreover, users now read emails at the time most convenient for them.

This result motivated us to continue our research. Building on the experience gained, we could optimize the whole approach to the problem, and many ideas for improving the method came up right away.

The first weakness of the maximum frequency method is that it treats all opens as equal. In reality, a user's activity pattern may change from year to year or even from month to month, because people's living conditions change quickly. Therefore, when it comes to finding a user's current preferences, old opens are less relevant and less statistically valuable than recent ones.

2. Finding the personalized time using the maximum weight method

Let's start by illustrating how user preferences change over time. We'll plot a frequency diagram for a randomly chosen user.

This user's behavior is quite clear: on weekdays, there are peaks on Tuesday from 16:00 to 17:00 and on Thursday from 13:00 to 14:00; on weekends, from 10:00 to 11:00 on Saturday.

Now let's extend this chart over a full year of email reading.

As we can see, the user's preferences have changed; the peak is completely different in each of the three graphs. In other words, depending only on how long a stretch of the user's history we took into account, we would send emails at completely different times, which shows that the current method is unstable.

Our solution was to assign a weight to every email the user opened, depending on the open date: the oldest opens get the smallest weight and the latest get the largest. We then sum the weights of opened emails for each day of the week and time of day.
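The weighting function is not specified here, so the sketch below assumes one common choice: exponential decay with a hypothetical half-life of 90 days. An open's weight halves every 90 days, and the slot with the largest weight sum wins. The function name and sample history are illustrative.

```python
from collections import defaultdict
from datetime import datetime

HALF_LIFE_DAYS = 90.0  # hypothetical: an open loses half its weight every 90 days


def max_weight_slot(open_times, now, half_life_days=HALF_LIFE_DAYS):
    """Return the (weekday, hour) slot with the largest sum of recency weights."""
    weights = defaultdict(float)
    for t in open_times:
        age_days = (now - t).total_seconds() / 86400
        weights[(t.weekday(), t.hour)] += 0.5 ** (age_days / half_life_days)
    if not weights:
        return None
    return max(weights, key=weights.get)


now = datetime(2018, 2, 12)
history = [
    datetime(2017, 2, 14, 16, 10),  # Tuesday, a year ago
    datetime(2017, 2, 21, 16, 20),  # Tuesday, a year ago
    datetime(2017, 2, 28, 16, 5),   # Tuesday, a year ago
    datetime(2018, 2, 1, 13, 15),   # Thursday, recent
    datetime(2018, 2, 8, 13, 30),   # Thursday, recent
]
print(max_weight_slot(history, now))  # (3, 13): Thursday, 13:00
```

Here three year-old opens on Tuesday at 16:00 lose to two recent opens on Thursday at 13:00, whereas a plain frequency count would pick Tuesday.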

We obtained the following diagram of weights for our user.

The result describes well the trend in this user's actual activity over the last year, which was also visible in the previous diagram. In addition, it indirectly solves the multimodality problem of the user's frequency distribution, because the weight method almost always detects a single peak for each user.

2.1. Testing the method and results

The test sample was compiled and split into experimental and control segments in the same way as for the maximum frequency method, and the test was run the same way. The control segment received the email at the usual time (15:00 for this company), while each user in the experimental segment received it at an individual time based on the algorithm's results.

2.2. Open Rate analysis

Contrary to expectations, the maximum weight method didn’t increase the Open Rate.

In the experimental segment, Open Rate was lower than in the control one.

Cumulative Open Rate, %

| Time after campaign start, hours | 1 | 3 | 12 | 24 | 48 | 72 | 168 |
|---|---|---|---|---|---|---|---|
| Control segment (usual time, 15:00) | 2.87 | 4.81 | 6.08 | 6.9 | 7.34 | 7.42 | 7.53 |
| Experimental segment (personal time) | 2.86 | 4.86 | 5.9 | 6.64 | 6.97 | 7.13 | 7.26 |
| Absolute difference, percentage points | -0.01 | 0.05 | -0.18 | -0.26 | -0.37 | -0.29 | -0.27 |
| Relative difference, % | -0.34 | 1.04 | -2.96 | -3.76 | -5.04 | -3.91 | -3.59 |

The relative difference of 3.6% is not statistically significant under these conditions. Still, the very fact of a neutral outcome makes us doubt that the algorithm is adequate.

Despite this, the density of opens during the first hour after delivery was higher in the experimental segment than in the control one.

So we still managed to find a convenient time for some users.

2.3. Click Rate analysis

With the previous method, we achieved a 35% (!) increase in Click Rate alongside 10% Open Rate growth. Surprisingly, although the experimental segment's Open Rate in this test is 3.6% lower, its Click Rate is 8.4% higher than in the control segment.

Cumulative Click Rate, difference between segments

| Time after campaign start, hours | 1 | 3 | 8 | 24 |
|---|---|---|---|---|
| Absolute difference (experimental minus control), percentage points | 0.23 | 0.19 | 0.2 | 0.21 |
| Relative increase, % | 9.66 | 7.82 | 8.2 | 8.43 |

What does this seemingly ambiguous result tell us? Our interpretation is that a convenient personal time affects the chance that a link in the email gets clicked much more than the chance that the email gets opened. We send the user a message right when he has a free minute, maybe during a lunch break. Although the user could open his mailbox at another time, it is at this moment that he has time to spare, so he can click all the links he is interested in, which he might not get around to at another time. And most likely he would forget to come back to the email and click the links later.

So we concluded that, although the weight algorithm looks quite logical and gave an 8.4% relative increase in Click Rate, it still misses something important and needs further improvement. Let's move on.

3. Finding the personalized time using the peak activity method

At this stage, it became clear that focusing exclusively on Open Rate is just an intermediate step. Our main objective is user conversion after the user clicks through to the client's website via a link in the email.

We therefore concluded that, in addition to opens, many other factors must be taken into account, so the preferred time to start a mailing is described by a more complex function of several variables.

Moreover, this changes the very definition of a user's preferred mailing time.

Previously, we defined the preferred time as the time when the user reads the most emails. Now we define the preferred time to send a message as the moment of the user's peak activity.

The table below lists the parameters we used in the peak activity method. All parameters are measured on a common scale.

| Notation | Parameter |
|---|---|
| RW | Recency Weight |
| OW | Open Weight |
| CW | Click Weight |
| OT | Reaction time (from delivery to Open Time) |
| CT | Response time (from open to Click Time) |
| SP | Spam complaint penalty |

The mathematics behind the method turned out to be extensive, so we will not present the whole formula here. A few components are enough to give a general idea of how the method works.

Each user action has a recency weight (RW). The shape of the recency weight distribution is controlled by the parameter tr, which sets the length of the most recent period of the user's history that we treat as the priority.

In addition to this weight, every action (an email open, a click on a link in the email, or a spam complaint) has its own activity weight. These weights are described by more complex functions of several variables: time from delivery to open, time from open to click, repeated opens of the same email, intervals between repeated clicks on links in the same email, the sequence of opens and clicks, and so on.
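The full formula is not published, so the following is only an illustration of the structure described above, with made-up functional forms. It uses a logistic recency weight that stays close to 1 within the last `tr` days, an activity weight that rewards quick reactions (OT for opens, CT for clicks) and counts clicks more than opens, and a fixed negative penalty for spam complaints. All parameter values and names (`TR_DAYS`, `BASE_WEIGHT`, `SPAM_PENALTY`, `peak_activity_slot`) are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Action:
    kind: str           # "open", "click" or "spam"
    time: datetime      # when the action happened
    delay_hours: float  # OT for opens, CT for clicks; ignored for spam


# Hypothetical parameters, chosen only for illustration.
TR_DAYS = 60.0         # tr: length of the recent period treated as priority
STEEPNESS_DAYS = 10.0  # how quickly the recency weight falls after tr
BASE_WEIGHT = {"open": 1.0, "click": 3.0}
SPAM_PENALTY = -10.0


def recency_weight(age_days, tr=TR_DAYS, s=STEEPNESS_DAYS):
    """RW: close to 1 within the last tr days, then decays smoothly (logistic)."""
    z = (age_days - tr) / s
    if z > 0:  # written this way to avoid overflow in math.exp for old actions
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def activity_weight(action):
    """OW / CW / SP: faster reactions weigh more, spam complaints subtract."""
    if action.kind == "spam":
        return SPAM_PENALTY
    return BASE_WEIGHT[action.kind] / (1.0 + action.delay_hours)


def peak_activity_slot(actions, now):
    """Return the (weekday, hour) slot with the highest total weighted activity."""
    scores = defaultdict(float)
    for a in actions:
        age_days = (now - a.time).total_seconds() / 86400
        scores[(a.time.weekday(), a.time.hour)] += recency_weight(age_days) * activity_weight(a)
    if not scores:
        return None
    return max(scores, key=scores.get)


now = datetime(2018, 2, 12, 12, 0)
actions = [
    Action("click", datetime(2018, 2, 8, 13, 20), 0.2),  # Thursday, quick click
    Action("open", datetime(2018, 2, 6, 16, 10), 0.0),   # Tuesday
    Action("open", datetime(2018, 2, 6, 16, 40), 0.0),   # Tuesday
]
print(peak_activity_slot(actions, now))  # (3, 13)
actions.append(Action("spam", datetime(2018, 2, 8, 13, 50), 0.0))
print(peak_activity_slot(actions, now))  # (1, 16): the complaint sinks Thursday
```

Even this toy version shows why such methods are hard to tune: every weight and threshold shifts which slot wins.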

3.1. Algorithm analysis

Although we had high hopes for this method, it did not produce any significant result.

The pitfall of all complex methods is that they are very difficult to tune to a particular problem. It's like musical instruments: the more sophisticated the instrument, the harder it is to tune. In our case, the weight calculation system needs a detailed review. We still believe that a complex algorithm should describe user behavior best, since it takes all the possible aspects into account.

4. Direction of our work

At the current stage of our research, we face a paradox: the simplest algorithm is the most effective. We are now working in several directions:

- we are redesigning and testing the peak activity and maximum weight algorithms;

- we are solving the “cold start” problem, that is, finding the optimal time for users with a short history (we find similar users for each new subscriber and base the send time on their preferences);

- we are combining the personalized time algorithm and the frequency recommendation engine (available in Russian) into a single system.
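The cold-start idea can be sketched as a nearest-neighbour vote. Here similarity is measured by the Jaccard index of the sets of senders two users are subscribed to; this feature, `k = 3`, the sender ids and the function names are assumptions for illustration, not a description of the production system.

```python
from collections import Counter


def jaccard(a, b):
    """|A ∩ B| / |A ∪ B|; 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cold_start_slot(new_user_senders, known_users, k=3):
    """Suggest a (weekday, hour) slot for a new subscriber.

    known_users: list of (senders, preferred_slot) pairs for users with enough
    history. The slot preferred by most of the k most similar users wins.
    """
    ranked = sorted(known_users, key=lambda u: jaccard(new_user_senders, u[0]), reverse=True)
    votes = Counter(slot for _, slot in ranked[:k])
    if not votes:
        return None
    return votes.most_common(1)[0][0]


# Hypothetical users with an already computed preferred slot.
known = [
    ({"shop_a", "shop_b"}, (0, 9)),
    ({"shop_a", "shop_b", "shop_c"}, (0, 9)),
    ({"shop_a"}, (4, 19)),
    ({"news_x", "news_y"}, (5, 10)),
]
print(cold_start_slot({"shop_a", "shop_b"}, known))  # (0, 9): Monday, 09:00
```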

5. Conclusions

Contrary to popular belief, mailing time optimization is not a myth but a reality. The catch is that this task cannot be solved by general recommendations; it requires developing personalized algorithms.

In our tests, the algorithms increased Open Rate by 5% to 30% and Click Rate by 8% to 60%. Most importantly, we significantly increased Click Rate, because bringing the subscriber to the client's website matters much more than a high Open Rate.

Keep in mind that the offer in your email is the key to the success of any mailing campaign. Personalized send time is not a core solution but just one component of the sophisticated mechanism of email marketing optimization: a gear that turns together with all the other analytical methods. Only a comprehensive approach to streamlining the email marketing strategy can achieve impressive results.